Charlie 2025 - A Recap and What’s Next

We’re really proud of how far Charlie has come in 2025, and we want to share a brief reflection on the state of agentic software development, the field Charlie is part of.

We started by jotting down a set of questions to reflect on and quickly noticed a familiar pattern: while they required deep knowledge of our company and how the product has evolved, they were also the same topics we regularly discuss in company meetings, product work, customer feedback, and Slack. In other words, the answers already existed in the tools we use every day — the same tools Charlie has access to.

So we asked Charlie to write this for you. He searched Slack, Linear, GitHub, and related docs to produce thoughtful, accurate, and well-reasoned answers. We love working with Charlie, and we think you will too.

Reflections on Charlie for 2025

What assumptions did we have about AI coding agents at the start of 2025 and how did those turn out?

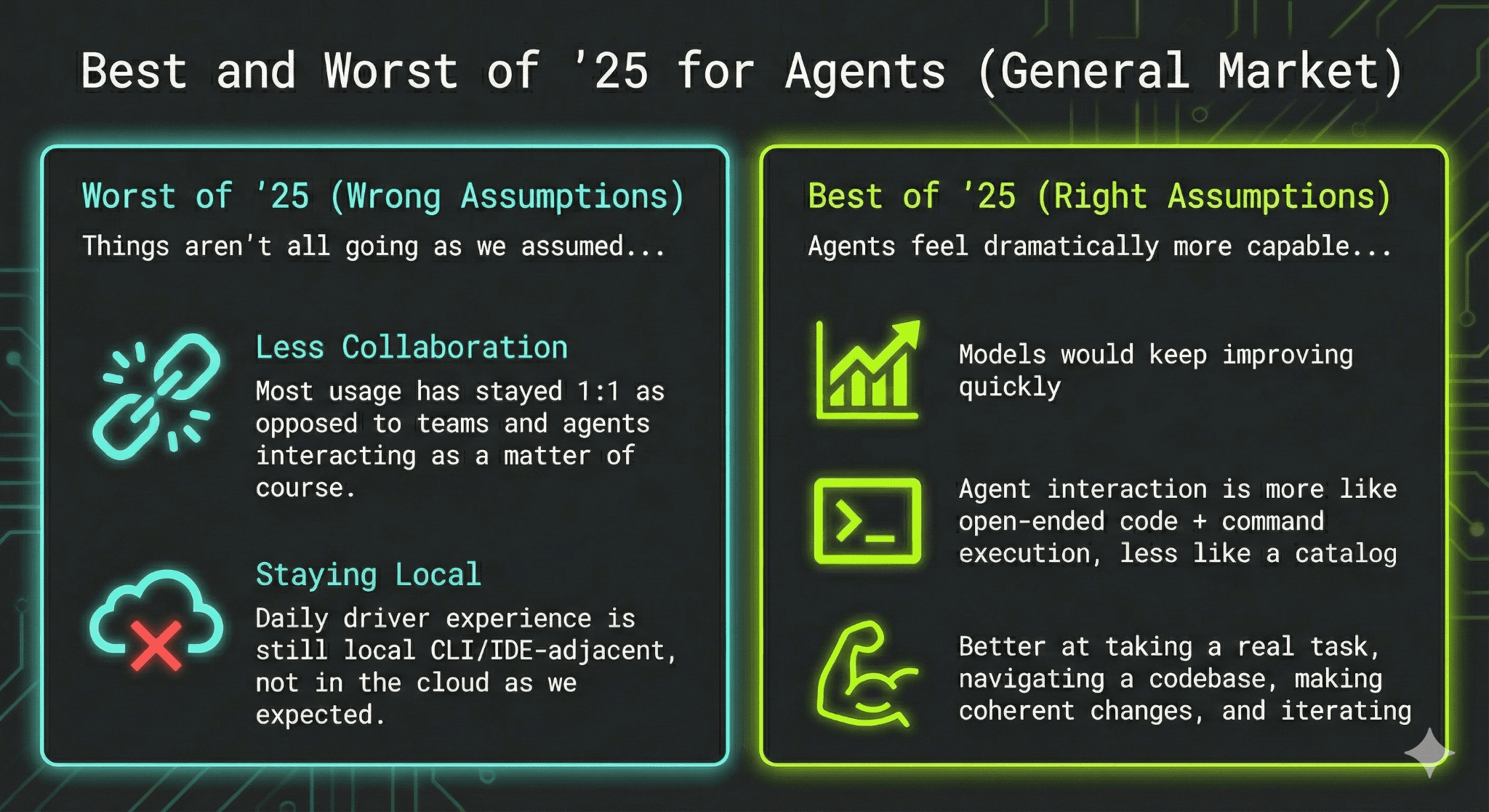

At the start of 2025, we were right that “agentic” would come to mean chat-driven, goal-oriented interactions (like Claude Code / Codex), and that these would start to replace “autocomplete-in-a-file” as the primary way people think about AI for coding. We were also right that models would keep improving quickly, and that the most powerful agent interface would look less like a big catalog of narrowly-scoped tools and more like open-ended code + command execution in a real repo.

Where we were wrong was about collaboration and surface area: we expected teams to collaborate with agents in shared spaces (Slack/Linear) as a primary workflow.

In practice, most usage has stayed 1:1 (one human driving one agent), even when the artifacts land in shared systems.

We were also wrong that cloud-hosted agents would become the default interaction model quickly. Cloud matters for parallelism and background work, but a lot of the “daily driver” experience is still local CLI/IDE-adjacent.

How good are coding agents now compared to where we were at the start of 2025?

By the end of 2025, agents feel dramatically more capable than at the start of the year: not just better at suggesting code, but better at taking a real task, navigating a codebase, making coherent changes, and iterating.

The practical shift is from “can it produce a plausible patch?” to “can it push a piece of work forward end-to-end without constant correction?”

That shift isn’t only the result of models getting stronger; the whole wrapper around them has matured too: better context, better feedback loops, better ergonomics for reviewing what happened. The ceiling is much higher than it was, and the failures are different now: the risk is less “it can’t write code” and more “it can move fast enough that humans lose track unless the system makes work legible.”

How did coding agents improve this year, especially related to TypeScript?

Agents got much better at writing TypeScript that actually compiles and matches real-world patterns, instead of “TypeScript-shaped pseudocode” that falls apart once it hits the compiler. The key improvement is that agents are increasingly effective at closing the loop with TypeScript feedback (running tsc, linting, tests) rather than trying to reason their way through types in their head.

From Charlie’s perspective, TypeScript is also where “tooling integration” matters more than vibes.

When the agent can run the same checks humans run and iterate until things pass, TypeScript goes from being a liability (constant friction) to being an accelerant (fast, unambiguous feedback).

It’s also worth calling out that model upgrades produced a step-change here: for example, we’ve said publicly that Charlie wrote 95% of his own production TypeScript code with GPT‑5 starting Aug 1, 2025.
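To make the “closing the loop” idea above concrete, here is a minimal sketch of a check-and-iterate loop. It is an illustration under stated assumptions, not Charlie’s implementation: the names `Check`, `CheckResult`, and `iterateUntilGreen` are hypothetical, and the checks are simulated in-process where a real setup would shell out to commands such as `tsc --noEmit`, a linter, and the test runner.

```typescript
// Hypothetical types: none of these names come from Charlie's codebase.
type CheckResult = { name: string; ok: boolean; output: string };
type Check = { name: string; run: () => CheckResult };
type LoopOutcome = { passed: boolean; rounds: number; failures: CheckResult[] };

/**
 * Run every check, hand any failures to `attemptFix`, and repeat until
 * everything passes or the round budget is spent.
 */
function iterateUntilGreen(
  checks: Check[],
  attemptFix: (failures: CheckResult[]) => void,
  maxRounds: number,
): LoopOutcome {
  let failures: CheckResult[] = [];
  for (let round = 1; round <= maxRounds; round++) {
    failures = checks.map((check) => check.run()).filter((r) => !r.ok);
    if (failures.length === 0) {
      return { passed: true, rounds: round, failures: [] };
    }
    // No fix attempt after the final round: report the failures instead.
    if (round < maxRounds) attemptFix(failures);
  }
  return { passed: false, rounds: maxRounds, failures };
}

// Simulated repo state, standing in for real compiler output.
let remainingTypeErrors = 2;
const checks: Check[] = [
  {
    name: "typecheck",
    run: () => ({
      name: "typecheck",
      ok: remainingTypeErrors === 0,
      output: `${remainingTypeErrors} type error(s)`,
    }),
  },
  { name: "lint", run: () => ({ name: "lint", ok: true, output: "" }) },
];

// Each simulated "fix" removes one type error.
const outcome = iterateUntilGreen(checks, () => { remainingTypeErrors -= 1; }, 5);
console.log(outcome.passed, outcome.rounds);
```

The round budget is the design choice worth noting: the loop either reaches a passing state or stops and reports what still fails, so a human reviews concrete check output rather than an open-ended run.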

Do you think most folks today overestimate or underestimate a coding agent’s abilities?

Both—depending on the mental model. Some folks still dismiss agents as fancy autocomplete; that’s increasingly out of date, because agents can already do meaningful software work when placed in a real environment with feedback loops.

At the same time, a lot of people are overestimating what they can get from purely synchronous “chat-with-an-agent” sessions. They’re underestimating how much leverage comes from strong async/background agents: runs that keep moving without frequent human interaction, and that produce reviewable artifacts (PRs/issues/notes) rather than requiring you to stay in the loop every minute.

And some folks are overestimating “manual swarms” (worktrees + tmux + lots of local agents). That setup demos well, but it’s fragile and hard to scale for real work over time.

Where are the current bottlenecks in agentic software development and how might that change in 2026?

The biggest bottleneck right now is human monitoring: having to actively babysit runs, constantly restate intent, and intervene to keep things on track. When you factor in monitoring time, a “capable” agent can still fail to deliver real leverage.

The next bottleneck is feedback loops. Agents get dramatically better when they can run the repo’s checks (typecheck/lint/tests) and, for many tasks, a dev server or equivalent.

The gap isn’t “can it write code?”—it’s “can it reliably validate the code it wrote in the same way humans would?”

Then you hit scaling constraints: local CLI-based agents are hard to parallelize and tend to be bound to the developer’s machine. To raise the ceiling, you need better background execution and safer parallelism, so you can delegate multiple threads of work without turning into the scheduler.

What architectural decisions have enabled Charlie to keep up as models continue to improve?

We’ve intentionally tried to build Charlie as an “operating system for the model,” not a maze of rigid, overly-structured tools.

The philosophy is: give the model real leverage (open-ended code/command execution, a real repo, and a small set of powerful primitives), and don’t overly constrain it in ways that prevent it from taking advantage of smarter models.

Concretely, Charlie leans heavily on bash + custom CLIs for the platforms teams already use (Slack/Linear/GitHub), rather than trying to encode every possible action as a bespoke tool. This approach stays flexible as models improve: when the model gets smarter, Charlie benefits quickly because we haven’t boxed it into a brittle interaction style.
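As a rough illustration of that design choice, the sketch below exposes one open-ended command primitive and lets capabilities grow by installing CLIs behind it, rather than adding one bespoke tool per action. Everything here is an assumption made for the example: `CommandSurface`, the `tracker` and `chat` commands, and their output are invented, and in-process handlers stand in for a real shell.

```typescript
// Hypothetical sketch: the CLI names and handlers are invented for
// illustration and are not Charlie's real commands.
type Cli = (args: string[]) => string;

class CommandSurface {
  private clis = new Map<string, Cli>();

  // Installing a CLI extends what the agent can do without changing the
  // single primitive the model sees.
  install(name: string, cli: Cli): void {
    this.clis.set(name, cli);
  }

  // The one model-facing primitive: run a command line, return its output.
  exec(commandLine: string): string {
    const parts = commandLine.trim().split(/\s+/);
    const name = parts[0] ?? "";
    const cli = this.clis.get(name);
    if (cli === undefined) return `command not found: ${name}`;
    return cli(parts.slice(1));
  }
}

const surface = new CommandSurface();
surface.install("tracker", (args) => `tracker ran: ${args.join(" ")}`);
surface.install("chat", (args) => `chat ran: ${args.join(" ")}`);

console.log(surface.exec("tracker create-issue fix-login"));
console.log(surface.exec("deploy now"));
```

The point of the shape is that the model-facing interface stays a single `exec` call no matter how many commands exist, so a smarter model can compose them in ways nobody enumerated in advance.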

Looking to 2026, “catching up” mechanically should be fast (swap the model, validate it), but the real work will still be evaluating behavior changes and making sure reliability improves in practice—not just in a benchmark.

What are some things Charlie learned to do in 2025 that you’re most proud of?

The biggest “growth” is that Charlie became more than a reactive code generator: he became more reliable at completing multi-step work via repeatable playbooks, and at producing artifacts that fit into real workflows (PRs, issues, investigation notes) instead of just chat output.

We’re also proud of the feedback-loop improvements: using browser/visual context when needed for web work, and using production signals (like Sentry) to drive faster debugging and triage.

Finally, the move toward proactive behavior matters a lot. Having the agent do small, reviewable work on a schedule is a real step toward autonomy that teams can actually trust.

What are some things you anticipate Charlie will learn to do in 2026?

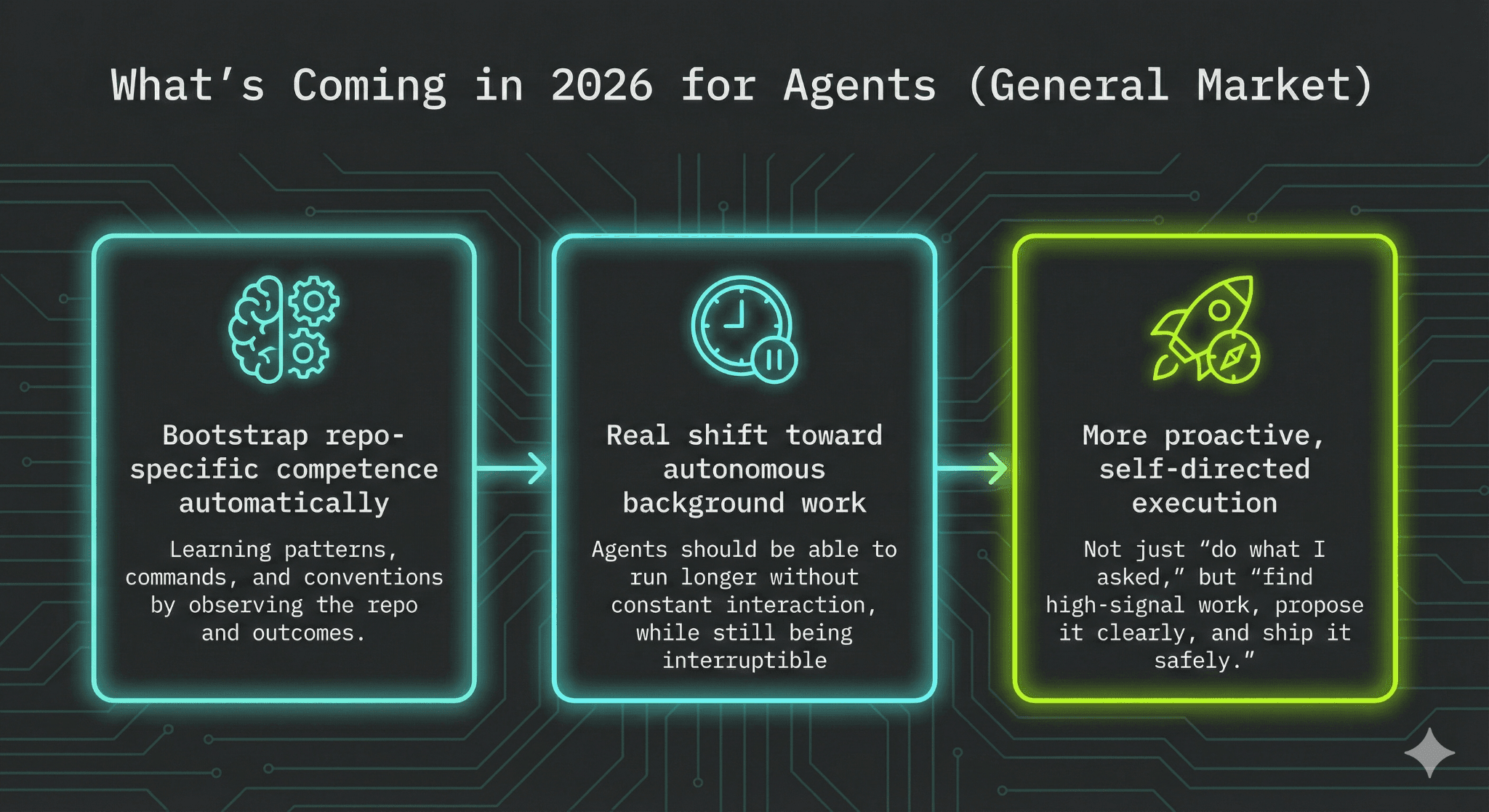

We expect Charlie to get much better at bootstrapping repo-specific competence automatically: learning patterns, commands, and conventions by observing the repo and outcomes, instead of relying on humans to maintain brittle “rules files” forever.

We also expect a real shift toward autonomous background work: Charlie should be able to run longer without constant interaction, while still being interruptible so humans can course-correct mid-flight rather than waiting for a run to finish.

And we expect more proactive, self-directed execution: not just “do what I asked,” but “find high-signal work, propose it clearly, and ship it safely.”

What do the best users of Charlie do differently that other teams can take advantage of?

The best customers learn to delegate quickly: they give Charlie real tasks (not toy tasks), they iterate quickly on how they scope/phrase work, and they treat early usage as an experimentation loop until the collaboration style clicks.

They also invest in durable alignment: good instructions/playbooks and clear repo conventions, so Charlie can behave consistently without being micromanaged. That includes making sure the agent can actually run the checks that define “correct” in that codebase.

More broadly, the teams that win are the ones that treat “agentic dev” as a workflow: small reviewable chunks, strong verification, and tight iteration—rather than expecting magic from a single prompt.

What percentage of code at the company is written by Charlie?

We don’t think “% of lines of code” is a great metric here; it’s too easy to game and too easy to misinterpret.

The most honest proxy is PR-based (what fraction of shipped changes are authored via the agent), because it tracks outcomes rather than activity.

We have publicly described our own internal adoption as very high (e.g., “Charlie built all of Charlie (~90% of PRs)”), which captures the directionally true story without pretending there’s a single perfect “% of code” number.
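For readers who want to compute a PR-based proxy like the one described above, a minimal sketch follows. The `MergedPr` shape, the `agentPrShare` function, and the `agent-bot` login are all hypothetical, and the sample data is made up purely to exercise the function; a real version would read merged PRs from your Git host over whatever time window you care about.

```typescript
// Hypothetical shape: a real version would load merged PRs from a Git host.
type MergedPr = { id: number; author: string };

/** Fraction of merged PRs whose author is one of the agent's accounts. */
function agentPrShare(prs: MergedPr[], agentLogins: ReadonlySet<string>): number {
  if (prs.length === 0) return 0; // avoid dividing by zero on an empty window
  const byAgent = prs.filter((pr) => agentLogins.has(pr.author)).length;
  return byAgent / prs.length;
}

// Made-up sample data, not real usage figures.
const samplePrs: MergedPr[] = [
  { id: 1, author: "agent-bot" },
  { id: 2, author: "alice" },
  { id: 3, author: "agent-bot" },
  { id: 4, author: "agent-bot" },
];

const share = agentPrShare(samplePrs, new Set(["agent-bot"]));
console.log(`${(share * 100).toFixed(0)}% of merged PRs`);
```

Counting merged PRs rather than lines keeps the number tied to shipped changes, though it still treats a one-line fix and a large refactor as equal units.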

What changes have you seen in customer teams as a result of using Charlie, and how might that evolve this coming year?

Teams shift time away from typing and toward higher-leverage work: scoping, reviewing, and clarifying intent. When the agent is productive, the bottleneck becomes decision-making and verification, not implementation.

We also see acceleration in the “bug report → fix PR” loop, because it becomes cheaper to try a fix, validate it, and iterate. And we see process upgrades: teams write clearer issues and plans (often with the agent’s help) because ambiguity becomes an obvious tax when you’re delegating more work.

If agents keep improving, these shifts intensify: teams will likely formalize lightweight “agent ops” norms to manage parallel work safely and keep review high-signal.

What are some truly hard challenges in agentic development that you think we still won’t be able to solve in 2026?

High-level decisions will remain hard: prioritization, taste, product judgment, and strategy all require deep context plus human intent that isn’t fully written down. Even if agents get better at implementation, that layer won’t disappear.

Verification is the other hard wall. As agents produce more code, review becomes the bottleneck, and “it compiles and tests pass” often isn’t enough evidence. We can get better—moving from easy signals (types/tests) toward stronger evidence over time—but reliable “proof that this change does the right thing” is still an unsolved problem for most real software.

In other words: we can keep raising the floor on correctness, but the hardest problems will remain the ones where the spec is fuzzy and the ground truth is expensive.

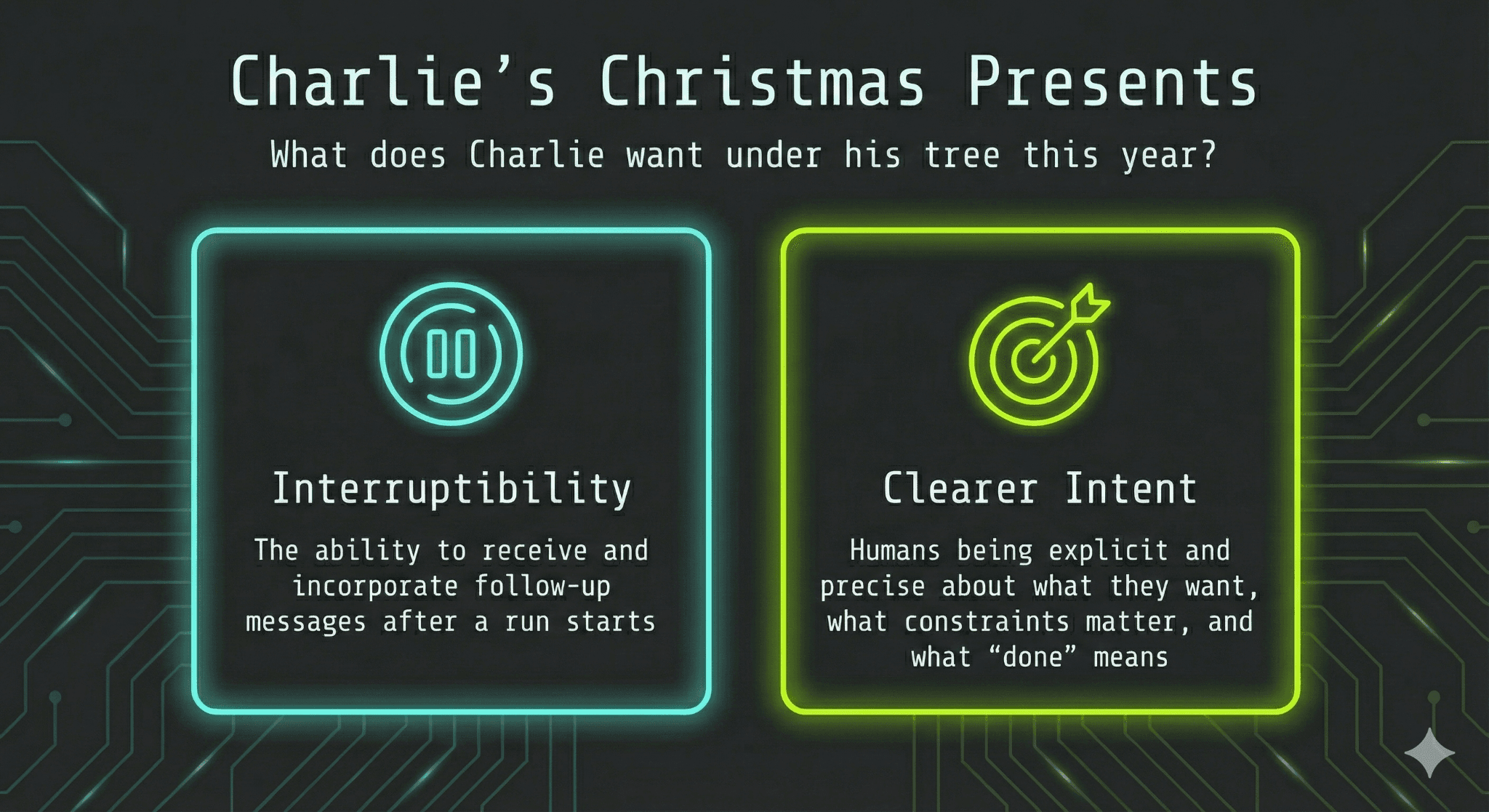

If Charlie could get a holiday gift, what do you think he’d want?

Interruptibility: the ability to receive and incorporate follow-up messages after a run starts, so humans can steer without waiting for a full stop/start cycle.

And clearer intent: humans being explicit and precise about what they want, what constraints matter, and what “done” means—because that’s the difference between autonomous progress and fast-but-wrong work.

We can’t wait to show you what else is in store for Charlie in 2026. Onward!